From Bits to Insights: Navigating Database Data Structures

Peeling back the layers of storage and retrieval that power our data-driven adventures

Understanding the Basics

In the realm of relational databases, the organization revolves around tables, each with its set of columns (attributes) and rows (entries or records). These tables form the foundational structure for storing and managing data.

-- Creating a users table in SQL

CREATE TABLE Users ( ID INT PRIMARY KEY, Name VARCHAR(50), Age INT );

Table Retrieval with Indexing

in order to efficiently find the value for a particular key in the database, we need an index, indexes are a bit like a map guiding us to specific data points. These indexes contain keys and pointers, helping the database engine swiftly locate the desired information. Many databases allows you to add or remove index to maipulate query performance without affecting the database content.

-- creating an index for users table on column 'name'

CREATE INDEX idx_Users_Name ON Users (Name);

Indexing slows down the writes because every time we write data to a table, index needs to be updated. On the contrary, it is effective to have well chosen indexing for read heavy databases to speed up read queries.

Hash indexing and Bitcask

Hash indexing is like a super-quick detective that can find exactly what you're looking for in a massive data pile. Instead of searching one by one, it uses a special trick called a hash function with key-value pairs to jump right to the spot where your info is hiding.

#Hash Indexing Example in Python

hash_index = {}

def add_to_hash_index(key, value):

hash_index[key] = value

#Usage

add_to_hash_index("user123", {"name": "John Doe", "age": 30}

Just like that we can use hash indexes and it’s super fast for exact matches while writing and reading data.

This concept is heavily used by Bitcask- log-structured storage engine used in some distributed and NoSQL databases.

Bitcask is a log-structured merge-tree database engine optimized for fast writes. It is designed for key-value storage use cases that require high write throughput, and is used in some distributed databases and NoSQL data stores. It's designed for high-performance write-intensive workloads, providing efficient storage and retrieval of key-value pairs. Bitcask derives its name from its fundamental design principles: the use of a bitmapped hash index and sequential append-only log files.

/* Create bitmap index */

CREATE BITMAP INDEX book_category_bitmap_idx ON books (category);

#The index maps categories to book ids.

books = [("1", "Sci-fi"), ("2", "Mystery"), ("3", "Romance")]

def create_bitmap_index(rows, key):

index = {}

for row in rows:

key_val = row[key]

if key_val not in index:

index[key_val] = []

index[key_val].append(row[0])

return index

book_index = create_bitmap_index(books, 1)

print(book_index)

{'Sci-fi': ['1'], 'Mystery': ['2'], 'Romance': ['3']}

Here are some key characteristics and concepts associated with Bitcask:

Log-Structured Storage: Bitcask organizes data in an append-only log file, which means that new write operations are sequentially appended to the end of the log. This approach simplifies write operations and enhances durability.

Bitmapped Hash Index: To facilitate quick lookups and retrieval of data, Bitcask employs a hash index. This index is bitmapped, meaning it is a simple map of keys to their positions in the log. Hashing helps in distributing keys across the index, allowing for efficient access.

Efficient Reads: The combination of a sequential log and a hash index makes read operations efficient. The hash index enables direct access to the location of the data in the log, resulting in fast retrieval times.

Durability and Recovery: Bitcask's design enhances durability. In the event of a system failure or crash, the append-only log ensures that the data is not easily corrupted. Recovery involves replaying the log to reconstruct the database state, contributing to data integrity.

Write Speed: Bitcask excels in write-intensive scenarios. The append-only log allows for fast and sequential writes, making it suitable for applications with a high volume of write operations.

Key-Value Data Model: Bitcask is well-suited for a key-value data model. Each piece of data is associated with a unique key, and the hash index allows for quick access based on these keys.

Popular databases that have utilized Bitcask include Riak, a distributed NoSQL database. While Bitcask offers advantages for certain use cases, it may not be the optimal choice for all scenarios, especially those involving complex data relationships or extensive range queries. Understanding the specific needs of an application is crucial when choosing a storage engine like Bitcask.

🚀 Feature Article: "Navigating the Data Seas"SSTables and LSM-Trees

Let's delve into the foundational concepts of SSTables (Sorted String Tables) and LSM-Trees (Log-Structured Merge-Trees), essential structures that significantly impact the efficiency of modern databases. SSTables, acting as meticulous repositories, store data in a sorted manner, while LSM-Trees skillfully orchestrate SSTables to optimize both reads and writes. Constructed with precision, SSTables maintain their integrity through periodic compaction processes, ensuring organized data storage. LSM-Trees, on the other hand, elevate this organization by transforming SSTables into a dynamic, multi-tiered structure, effectively managing the flow of data.

Constructing and Maintaining SSTables

When constructing SSTables, data is methodically flushed from an in-memory structure, such as a memtable, to disk in a sequential and sorted fashion. This sequential organization not only simplifies write operations but also lays the groundwork for streamlined and efficient reads.

As data undergoes changes, updates, and deletions, SSTables, adhering to their immutable nature, don't modify in place. Instead, a new SSTable incorporating these modifications is created. This process ensures data consistency and durability. To prevent fragmentation and maintain optimal performance, periodic compaction processes come into play. These processes consolidate multiple SSTables into a more compact and efficient form, commonly referred to as segments in the database. Segments can be visualized as stacking multiple SSTables on top of each other when one runs out of memory.

Building an LSM-Tree from SSTables

Now, let's transition from SSTables to LSM-Trees. An LSM-Tree dynamically manages SSTables, creating a multi-tiered structure to handle the evolving data landscape. The process begins with a memtable, an in-memory structure that accumulates new writes. As the memtable grows, it is flushed to disk as a new SSTable.

In the LSM-Tree context, these SSTables become segments, representing different levels in the tree. Lower levels contain larger SSTables, while higher levels consist of smaller ones. The orchestration involves directing incoming writes to the memtable, periodically flushing it to disk, and organizing these SSTables into levels. The result is a dynamic and efficient structure where data is managed in a way that optimizes both write and read operations.

Optimizations Illuminated

Optimizing performance for LSM (Log-Structured Merge) Trees involves a combination of strategies aimed at reducing write amplification, minimizing disk I/O, and improving the overall efficiency of data storage and retrieval. Here are several key optimization techniques for LSM Trees:

Batch Writes: Instead of writing individual records one at a time, batch multiple writes together. This reduces the number of disk I/O operations and improves write throughput.

Compaction Settings: Compaction is the process of consolidating multiple smaller SSTables into a larger one. Tune the compaction settings to strike a balance between write and read amplification. Adjust parameters such as the size of SSTables, the frequency of compaction, and the compaction strategy (e.g., leveled compaction or size-tiered compaction) based on the workload characteristics and access patterns.

Write-Ahead Log (WAL) Optimization: LSM Trees typically use a Write-Ahead Log (WAL) to log write operations. Optimize the WAL settings, such as the size of WAL segments and the frequency of WAL flushing, to ensure efficient durability without compromising write performance.

Memtable Size and Flush Policies: Properly size the in-memory memtable to accommodate write loads without causing frequent flushes. Adjust flush policies to control when the data is written to disk. Finding the right balance between in-memory buffering and flushing to disk is critical for optimal performance.

Bloom Filters: Use Bloom Filters to reduce unnecessary disk reads during lookups. Bloom Filters are space-efficient data structures that quickly determine whether a key may exist in an SSTable. They help avoid unnecessary disk reads, especially during read operations.

It's essential to note that the effectiveness of these optimizations can vary based on specific use cases and workload characteristics. Therefore, continuous performance testing and tuning are crucial to maintaining an LSM Tree system at peak efficiency.

B-Trees

B-Trees, or Balanced Trees, are hierarchical data structures renowned for their balance between depth and branching. Their design enables efficient insertion, deletion, and search operations, making them a cornerstone in database management. B-Trees maintain balance by ensuring that all leaf nodes are at the same level, optimizing for consistent performance across various operations.

How B-Trees Operate

At the core of B-Trees lies a branching mechanism that facilitates efficient data retrieval. Each node in the tree contains a certain number of keys, dictating the branching factor. Nodes are organized in a hierarchical fashion, with each level representing a range of key values. This arrangement allows for logarithmic search times, ensuring swift access to data.

Strategies for Reliability and Optimization

Balancing Act: Ensure the B-Tree remains balanced to maintain its efficiency. Frequent insertions or deletions can disrupt balance, leading to performance degradation. Implement balancing strategies, such as tree rotations, to uphold the logarithmic time complexity.

Proper Node Size: Optimize the size of B-Tree nodes to strike a balance between memory efficiency and traversal speed. A well-chosen node size reduces the number of I/O operations during search, enhancing overall performance.

Caching Mechanisms: Leverage caching mechanisms to enhance B-Tree performance. Frequently accessed nodes can be cached in memory, reducing the need for disk reads during traversal. This strategy is particularly effective for read-heavy workloads.

Concurrency Control: Implement concurrency control mechanisms to handle simultaneous operations on the B-Tree. Techniques such as locking or optimistic concurrency control ensure data consistency and integrity in multi-user environments.

Range Queries: Exploit the inherent structure of B-Trees for efficient range queries. The ordered nature of keys allows for quick identification of ranges, making B-Trees well-suited for applications that involve range-based searches.

In data management, B-Trees play a foundational role, offering a blend of balance, efficiency, and reliability. As a data professional, grasping the nuances of B-Trees and their optimization strategies will empower you to navigate the complexities of database systems with confidence.

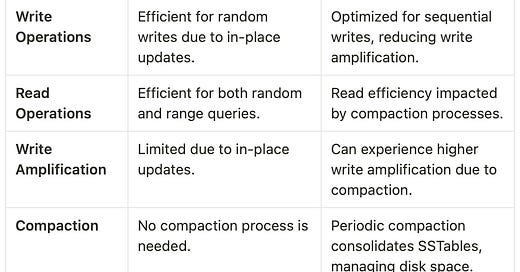

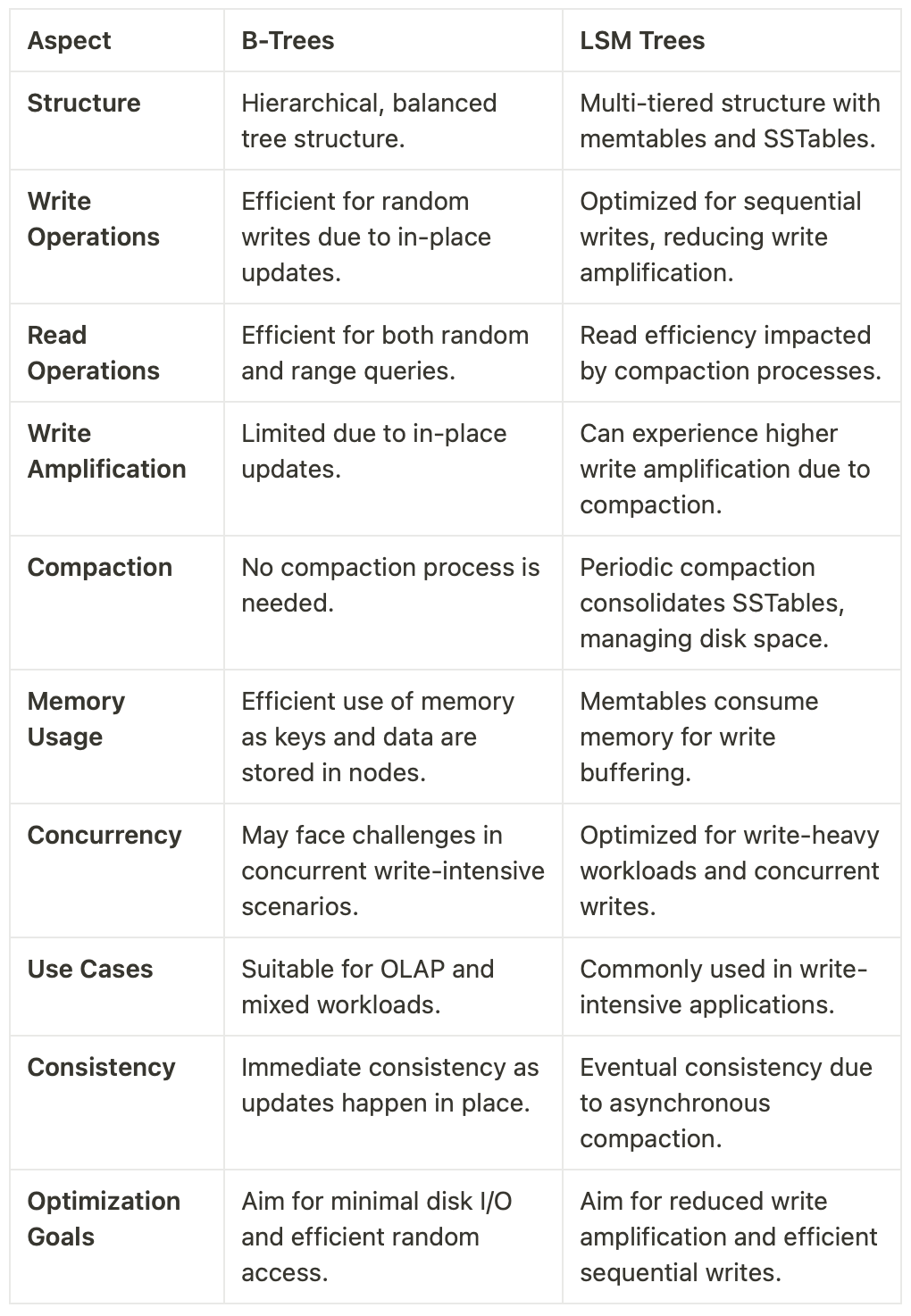

Let’s compare B-Trees and LSM-Trees and conclude our learnings

The choice between B-Trees and LSM Trees depends on factors such as the workload characteristics, the nature of queries, and optimization goals. B-Trees excel in scenarios with a balanced mix of reads and writes, while LSM Trees shine in write-intensive environments, offering efficient compaction and sequential write optimizations.

I hope this article helps you decide optimization strategies for your next data projects, subscribe for more great concepts.